Sharing Configuration Between WebJobs

29 Aug 2015 | Azure

The project I am working on started out with a single webjob and has since grown to multiple jobs running in parallel. They are all hosted within a dedicated web app, which allows us to scale the jobs independently of the rest of the application. And because they share a single container, there is the added side effect of the jobs sharing all the Application Settings and connection strings too.

While each webjob had its own class library, I didn't want to maintain multiple copies of the App.config file. I decided to move the common bits (the AppSettings and ConnectionStrings sections) into their own files and share them:

- In one of the webjob projects, I moved the AppSettings and ConnectionStrings into their own .config files - appSettings.config and connectionStrings.config respectively.

- Next, I referenced them back to the App.config using the configSource attribute.

- Finally, I added the same files as linked files to the other web jobs and set their Copy to Output Directory file property to Copy Always.
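Since every job now depends on those external files landing next to its App.config, a quick check of the build or publish output can catch a missing file early. The following is a minimal Python sketch of such a check, not part of the original setup: the function name `missing_config_sources` is hypothetical, and it assumes that configSource paths are relative to the config file that references them.

```python
import tempfile
import xml.etree.ElementTree as ET
from pathlib import Path


def missing_config_sources(app_config: Path) -> list[str]:
    """Return 'section -> file' entries whose configSource file is absent."""
    root = ET.parse(app_config).getroot()
    missing = []
    for element in root.iter():
        source = element.get("configSource")
        if source is None:
            continue
        # configSource is relative to the referencing config file.
        # Normalise Windows separators so the check also runs elsewhere.
        target = app_config.parent / source.replace("\\", "/")
        if not target.is_file():
            missing.append(f"{element.tag} -> {source}")
    return missing


if __name__ == "__main__":
    # Throwaway demo: one referenced file exists, the other does not.
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp)
        (folder / "appSettings.config").write_text("<appSettings />")
        config = folder / "App.config"
        config.write_text(
            "<configuration>"
            '<appSettings configSource="appSettings.config" />'
            '<connectionStrings configSource="connectionStrings.config" />'
            "</configuration>"
        )
        for problem in missing_config_sources(config):
            print("Missing:", problem)
```

Pointing it at the App.config inside each job's output folder reports any section whose external file was not copied.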

This works well enough, but for one caveat - which prompted me to write this post in the first place. The problem is that the Web Deploy Package publishing process does not appear to honor folder structures for the config files. That means that if you've separated the configuration into subfolders, the publishing process will flatten it out.

Enable SSRS Remote Errors in SharePoint Integrated Mode

28 Aug 2015 | SharePoint | SQL Server Reporting Services

Any time I have to troubleshoot issues in SQL Server Reporting Services (SSRS) reports in a production environment, I usually end up enabling Remote Errors at some point as part of my process.

Remote errors are enabled via the SSRS Service Application:

- Navigate to Central Administration > Application Management > Manage Service Applications.

- Next, click on the appropriate SQL Server Reporting Services Service Application to manage it.

- Click System Settings from the toolbar.

- Finally, enable remote errors by checking the Enable Remote Errors checkbox.

Entity Framework Namespace Collisions When Working with Multiple Contexts

03 Jun 2015 | Entity Framework | SQL Server

I came across the following exception while working with a solution that contained a couple of Entity Framework (EF) 6 database contexts.

System.Data.Entity.Core.MetadataException

Schema specified is not valid. Errors:

The mapping of CLR type to EDM type is ambiguous because multiple CLR types match the EDM type 'Setting'. Previously found CLR type 'SqlHelper.Primary.Setting', newly found CLR type 'SqlHelper.Secondary.Setting'.

at System.Data.Entity.Core.Metadata.Edm.ObjectItemCollection.LoadAssemblyFromCache(Assembly assembly, Boolean loadReferencedAssemblies, EdmItemCollection edmItemCollection, Action`1 logLoadMessage)

at System.Data.Entity.Core.Metadata.Edm.ObjectItemCollection.ExplicitLoadFromAssembly(Assembly assembly, EdmItemCollection edmItemCollection, Action`1 logLoadMessage)

at System.Data.Entity.Core.Metadata.Edm.MetadataWorkspace.ExplicitLoadFromAssembly(Assembly assembly, ObjectItemCollection collection, Action`1 logLoadMessage)

at System.Data.Entity.Core.Metadata.Edm.MetadataWorkspace.LoadFromAssembly(Assembly assembly, Action`1 logLoadMessage)

at System.Data.Entity.Core.Metadata.Edm.MetadataWorkspace.LoadFromAssembly(Assembly assembly)

at System.Data.Entity.Internal.InternalContext.TryUpdateEntitySetMappingsForType(Type entityType)

at System.Data.Entity.Internal.InternalContext.UpdateEntitySetMappingsForType(Type entityType)

at System.Data.Entity.Internal.InternalContext.GetEntitySetAndBaseTypeForType(Type entityType)

at System.Data.Entity.Internal.Linq.InternalSet`1.Initialize()

at System.Data.Entity.Internal.Linq.InternalSet`1.get_InternalContext()

at System.Data.Entity.Internal.Linq.InternalSet`1.ActOnSet(Action action, EntityState newState, Object entity, String methodName)

at System.Data.Entity.Internal.Linq.InternalSet`1.Add(Object entity)

at System.Data.Entity.DbSet`1.Add(TEntity entity)

at MultiContextConsoleApp.Program.Main(String[] args) in e:\shane\Projects\Orca\Sandbox\MultiContextConsoleApp\MultiContextConsoleApp\Program.cs:line 16

at System.AppDomain._nExecuteAssembly(RuntimeAssembly assembly, String[] args)

at System.AppDomain.ExecuteAssembly(String assemblyFile, Evidence assemblySecurity, String[] args)

at Microsoft.VisualStudio.HostingProcess.HostProc.RunUsersAssembly()

at System.Threading.ThreadHelper.ThreadStart_Context(Object state)

at System.Threading.ExecutionContext.RunInternal(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state)

at System.Threading.ThreadHelper.ThreadStart()
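The message hints at the cause: both namespaces define a class called Setting, and the lookup that pairs CLR types with EDM types appears to go by the simple class name alone. The Python toy below is only a model of that kind of lookup, not EF's code; `register_by_simple_name` and `AmbiguousTypeError` are hypothetical names used for illustration.

```python
class AmbiguousTypeError(Exception):
    """Raised when two qualified names share the same simple name."""


def register_by_simple_name(qualified_names):
    """Build a simple-name -> qualified-name map, rejecting duplicates."""
    registry = {}
    for qualified in qualified_names:
        # Only the part after the last dot takes part in the match.
        simple = qualified.rsplit(".", 1)[-1]
        if simple in registry:
            raise AmbiguousTypeError(
                f"multiple types match '{simple}': previously found "
                f"'{registry[simple]}', newly found '{qualified}'"
            )
        registry[simple] = qualified
    return registry


if __name__ == "__main__":
    try:
        register_by_simple_name(
            ["SqlHelper.Primary.Setting", "SqlHelper.Secondary.Setting"]
        )
    except AmbiguousTypeError as error:
        print(error)
```

In this model the namespace plays no part in the match, so giving one of the classes a different simple name is enough to make the collision go away.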

Generate a Clean Up Script to Drop All Objects in a SQL Server Database

02 Jun 2015 | SQL Server

I needed a quick and reusable way to drop all SQL Server objects from an Azure database. The objective was to have some kind of process to clean up and prep the database before the main deployment is kicked off. And given that I am particularly biased towards using a SQL script, my search for a solution focused on that.

In addition to actually dropping the artifacts, the script should be aware of the order in which to drop them - that is, drop the most dependent objects first and work its way towards the least dependent ones. And my nice-to-have feature is to be able to parameterize the schema name so that it could be used with a multi-tenant database schema.
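To make the ordering requirement concrete, here is a small Python sketch that derives a drop order from a dependency map and takes the schema name as a parameter. It is an illustration only: the object names, the shape of the dependency map and the function `drop_statements` are assumptions, and it is not the script that SSMS generates.

```python
from graphlib import TopologicalSorter


def drop_statements(objects, depends_on, schema):
    """Return DROP statements, most dependent objects first.

    objects:    name -> object kind, e.g. {"Orders": "TABLE"}
    depends_on: name -> set of names that object references
    schema:     schema name to qualify every object with
    """
    known = set(objects)
    # TopologicalSorter expects node -> predecessors, so each object
    # lists the objects it references (ignoring anything unknown).
    graph = {name: set(depends_on.get(name, ())) & known for name in objects}
    creation_order = list(TopologicalSorter(graph).static_order())
    # Dropping is creation in reverse: dependents go before what they use.
    return [
        f"DROP {objects[name]} [{schema}].[{name}];"
        for name in reversed(creation_order)
    ]


if __name__ == "__main__":
    objects = {"Customers": "TABLE", "Orders": "TABLE", "OrderSummary": "VIEW"}
    depends_on = {"Orders": {"Customers"}, "OrderSummary": {"Orders"}}
    for statement in drop_statements(objects, depends_on, "tenant_a"):
        print(statement)
```

Passing a different schema name reuses the same ordering for another tenant.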

I saw a few possible solutions and finally settled on using the out-of-the-box feature that's already available through SQL Server Management Studio (SSMS).

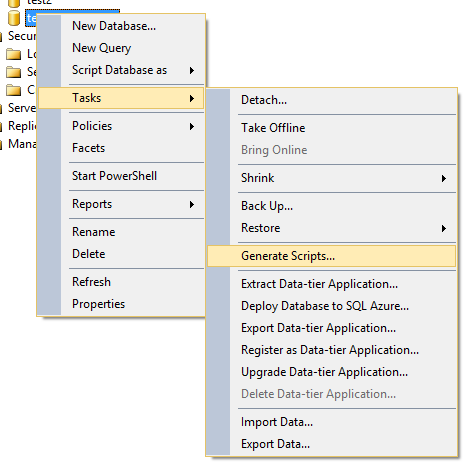

- Open up SQL Server Management Studio.

Select

Task > Generate Script...on on your the database context menu. This would open up theGenerate and Publish Scriptsdialog.

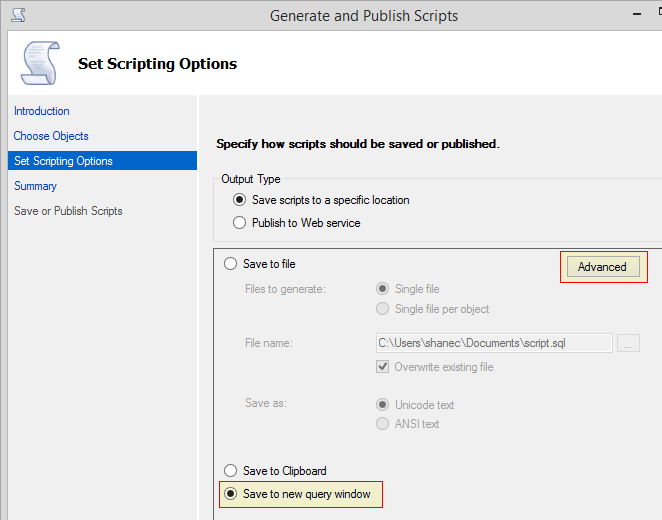

First, navigate to the

Choose Objectstab and select all the objects that need to be dropped.Next, on the

Set Scripting Optionstab, select the preferred output location.

Next, click the

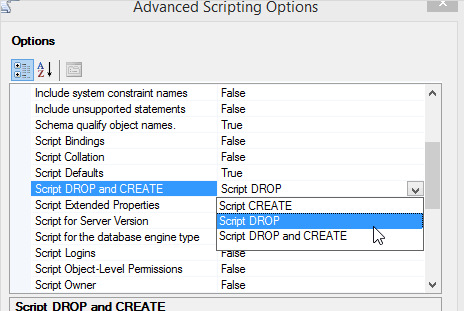

Advancedbutton which result in theAdvanced Scripting Optionsdialog.

Navigate down towards to and change the

General > Script DROP and CREATEoption toScript DROP.- Set the default values for the rest of the steps and finally click the

Finishbutton.

Recording Diagnostics on an Azure App Service Hosted Website using Log4Net

I've been working on moving an existing web based software solution into the Azure cloud ecosystem. The solution is tightly integrated with Log4Net, which it uses as its logging framework. My primary goal, in terms of logging, was to keep as much of my original architecture intact and at the same time make maximum use of the diagnostics infrastructure that is available in Azure.

The official documentation states that calls to the System.Diagnostics.Trace methods are all that is required to start capturing diagnostic information. In summary, this is all I needed to do:

- Enable diagnostics and configure the storage locations (discussed later down the post).

- From within my code, write the Warning, Error and Information messages via their respective trace methods.

- ...

- Azure starts capturing the custom diagnostics information - PROFIT!

Sounds simple enough.

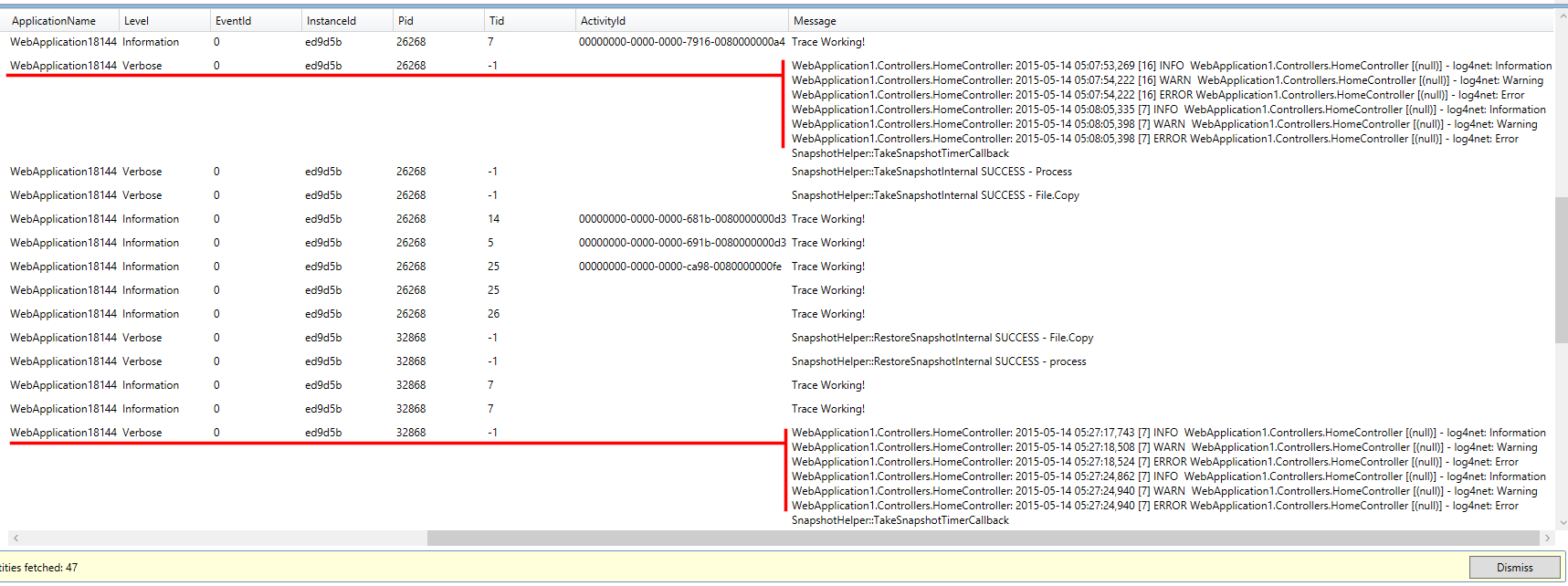

So I thought if I just set up a TraceAppender everything would work fine and that would be the end of it. The results were not what I was expecting and this was the output in my table storage:

The trace entries are bunched together as a single Verbose entry and the writes appear to be buffered. Not acceptable. I suppose the buffering could be because I had not used the ImmediateFlush option for the TraceAppender, but I need each Trace statement to have its own entry in the table.

While there are a lot of posts on the internet on how to set up Log4Net with Azure, most of them appear to be out of date and seem to be compensating for features that were not available in Azure at the time of their implementation. Then there are others that are targeted towards integrating with Cloud Services, which is not what I was looking for.

Start Azure Web Jobs On Demand

18 May 2015 | Azure | PowerShell | REST

I've been working on an Azure based solution recently and have been using the free tiers to quickly get the solution up and running and to perform the first few QA cycles. The core solution is based around a single app service website and a second website that acts as the host for a continuous web job which is triggered via a queue. The problem with the free tiers is that there's a high possibility that the web job would shut itself down and hibernate if it is idle for more than 20 minutes:

As of March 2014, web apps in Free mode can time out after 20 minutes if there are no requests to the scm (deployment) site and the web app's portal is not open in Azure. Requests to the actual site will not reset this.

A little research shows a few possible solutions:

- If the job is not time sensitive, then manually start the service remotely using a script or tool.

- Make your code explicitly start the web job just as a new request is being enqueued. This can be done by making a REST call to the deployment site.

- And lastly, upgrade to a Basic or Standard tier and enable "Always On", which keeps the site (and jobs) "warm" and prevents them from hibernating.
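The second option can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not the implementation from the post: it assumes the Kudu WebJobs REST endpoint for continuous jobs (`/api/continuouswebjobs/{job}/start` on the site's scm host) and basic authentication with the site's deployment credentials. The site name, job name and credentials are placeholders, and the helper names are hypothetical.

```python
import base64
import urllib.request


def build_start_request(site_name, job_name, user, password):
    """Build the POST request that asks the scm site to start a continuous job."""
    url = (
        f"https://{site_name}.scm.azurewebsites.net"
        f"/api/continuouswebjobs/{job_name}/start"
    )
    # Basic authentication with the deployment credentials (assumed).
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=b"",
        method="POST",
        headers={"Authorization": f"Basic {token}"},
    )


def start_webjob(site_name, job_name, user, password):
    """Send the start request and return the HTTP status code."""
    request = build_start_request(site_name, job_name, user, password)
    with urllib.request.urlopen(request) as response:
        return response.status
```

Calling `start_webjob` just before a message is enqueued means the request goes to the scm site, which is the one the quoted note says resets the idle timeout.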

Parameterize Schema Name within a SSDT Database Project for Multi-Tenant Solutions

09 May 2015 | SQL Server | SQL Server Data Tools

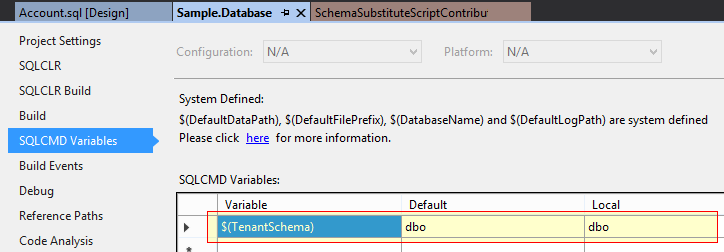

I've been working on a multi-tenant solution recently and have been trying to come up with an efficient way to manage the database deployment and upgrade. The database is designed to segregate each tenant's data under its own schema namespace, so I need to generate a reusable script that can be deployed against each tenant. The approach I am going to take is to first source control the database schema within a SQL Server Data Tools (SSDT) database project and then use it to generate a script that can be parameterized with the tenant information.

I first parameterized the schema name as a SQLCMD variable - $(TenantName):

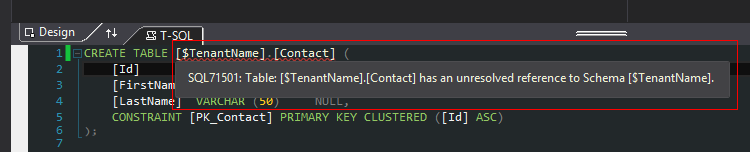

Next I tried to replace the schema name with the new variable, but this did not work: building the solution now fails with a 71502 error, as the project is no longer able to resolve and validate schema objects.

SQLCMD does not have any complaints if I replace [dbo] with [$(TenantName)] in the generated script, so it's the SSDT project that is attempting to maintain the integrity of the database.
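For context, SQLCMD variables are written as `$(Name)` inside a script and are swapped for their values before the batch runs. The Python sketch below is a rough imitation of that substitution, only to show why the generated script is happy with a variable in the schema position. The function name and the sample statement are made up for illustration, and this is not how sqlcmd itself is implemented.

```python
import re

# Matches SQLCMD-style variable references such as $(TenantName).
_VARIABLE = re.compile(r"\$\((\w+)\)")


def substitute_sqlcmd_variables(script, variables):
    """Replace every $(Name) in script with its value from variables."""

    def replace(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"SQLCMD variable '{name}' is not defined")
        return variables[name]

    return _VARIABLE.sub(replace, script)


if __name__ == "__main__":
    template = "CREATE TABLE [$(TenantName)].[Setting] (Id INT NOT NULL);"
    print(substitute_sqlcmd_variables(template, {"TenantName": "tenant_a"}))
```

The substitution is purely textual, which is why it works at deployment time while the SSDT build, which validates object references, rejects the same text.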

One possible way to overcome this is to suppress the 71502 errors by turning them into warnings. The disadvantage of this approach is that you lose the rich validation in exchange for something that is essentially a deployment convenience.

Another duct tape and bubble gum approach would be to just have some kind of post deployment operation that does a find and replace on the schema name. Sure it would work, but that's not going to be reliable in the long run.

A little bit of research reveals that the proper way to alter how the deployment script is created is to write a deployment plan modifier. A deployment plan modifier is essentially a class that inherits from DeploymentPlanModifier and allows you to inject custom actions when deploying a SQL project. There does not seem to be much formal documentation on the process, so I relied a lot on this article in MSDN, the sample DACExtensions and what forum posts I could find. So with a lot of trial and error I wrote my own plan modifier that replaces the schema identifiers when the database project is published.

Disabling the SharePoint Search Service

11 Mar 2015 | SharePoint | Windows

If left unchecked, the SharePoint search service attempts to consume most of the available memory in a resource-constrained development box. I usually disable it when I am not actively working with it.

This seems to be the most effective way to completely disable the service. Although changing the password to an invalid one works as expected, it is important to switch the service account from a domain account to a local user account as well. If not, the constant invalid login attempts could trigger the account lockout threshold policy in the domain.

So for convenience sake I created two batch files - one to disable the service and another to bring it back up.

Create an Elevated Command Prompt Running Under a Different User Context

07 Mar 2015 | Security

User Account Control (UAC) is a security mechanism that is available in most modern versions of Windows. It restricts the ability to make changes to a computer environment without the explicit consent of an administrator. As with most production environments, it is very likely that this feature is already enabled. Just as likely is the need to execute applications and commands under a different user within an elevated context during application deployment or maintenance.

This is achieved by using the runas command with the /noprofile flag. The command below creates an elevated command line running under the wunder\admin user:

runas /noprofile /user:wunder\admin cmd

Now, all commands executed within this new command prompt would be in the same elevated status.

Creating a Self-Signed Wild Card SSL Certificate for Your Development Environment

05 Mar 2015 | Security

Secure Sockets Layer (SSL) is a security standard used to ensure secure communication between a web server and a browser, and it is used in most modern web applications. As a developer it is prudent to set up your development environment to resemble production as closely as possible, including security concerns. However, getting a full-fledged CA SSL certificate for your development environment might not be the most cost-effective solution. This post therefore summarizes the steps I take to create a self-signed wildcard certificate to be used in internal environments. My guide is based on this excellent post.

Create the Certificate

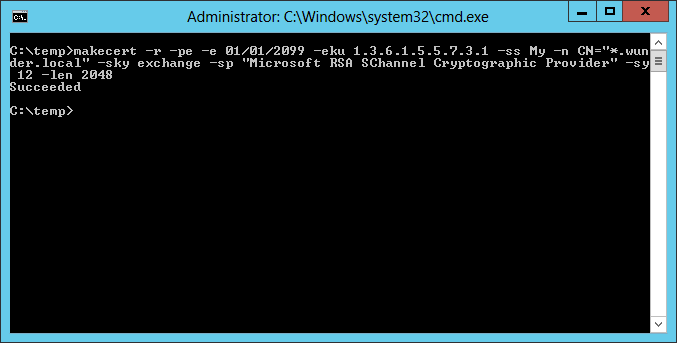

To create the certificate we will use the MakeCert.exe tool, which can be found at C:\Program Files (x86)\Windows Kits\8.1\bin\x64\. This command creates the certificate and adds it to the logged in user's personal certificate store:

makecert -r -pe -e 01/01/2099 -eku 1.3.6.1.5.5.7.3.1 -ss My -n CN="*.wunder.local" -sky exchange -sp "Microsoft RSA SChannel Cryptographic Provider" -sy 12 -len 2048

Some of the notable flags:

- -r - Indicates that we're creating a self-signed certificate.

- -pe - Includes the private key in the certificate and makes it exportable.

- -e - The validity period of the certificate.

- -n - The subject's certificate name - specify the wildcard url.
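When the same certificate has to be recreated for several internal domains, the command can be assembled from a script so that each flag stays documented in one place. The Python sketch below only rebuilds the argument list shown above; the helper name `makecert_args` and its parameters are hypothetical, and it assumes makecert is reachable on the PATH when the command is eventually run.

```python
def makecert_args(subject, expires="01/01/2099", key_length=2048):
    """Return the makecert argument list for a self-signed wildcard certificate."""
    return [
        "makecert",
        "-r",                        # self-signed
        "-pe",                       # exportable private key
        "-e", expires,               # end of the validity period
        "-eku", "1.3.6.1.5.5.7.3.1", # enhanced key usage: server authentication
        "-ss", "My",                 # personal certificate store
        "-n", f"CN={subject}",       # subject name, e.g. the wildcard host
        "-sky", "exchange",          # key type
        "-sp", "Microsoft RSA SChannel Cryptographic Provider",
        "-sy", "12",                 # provider type matching the provider above
        "-len", str(key_length),     # key length in bits
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch is safe anywhere.
    print(" ".join(makecert_args("*.wunder.local")))
```

To actually create the certificate on a Windows machine, the list can be handed to `subprocess.run(makecert_args("*.wunder.local"), check=True)`.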